Linear Regression Health Insurance Cost Model with Different M.L. Algorithms.

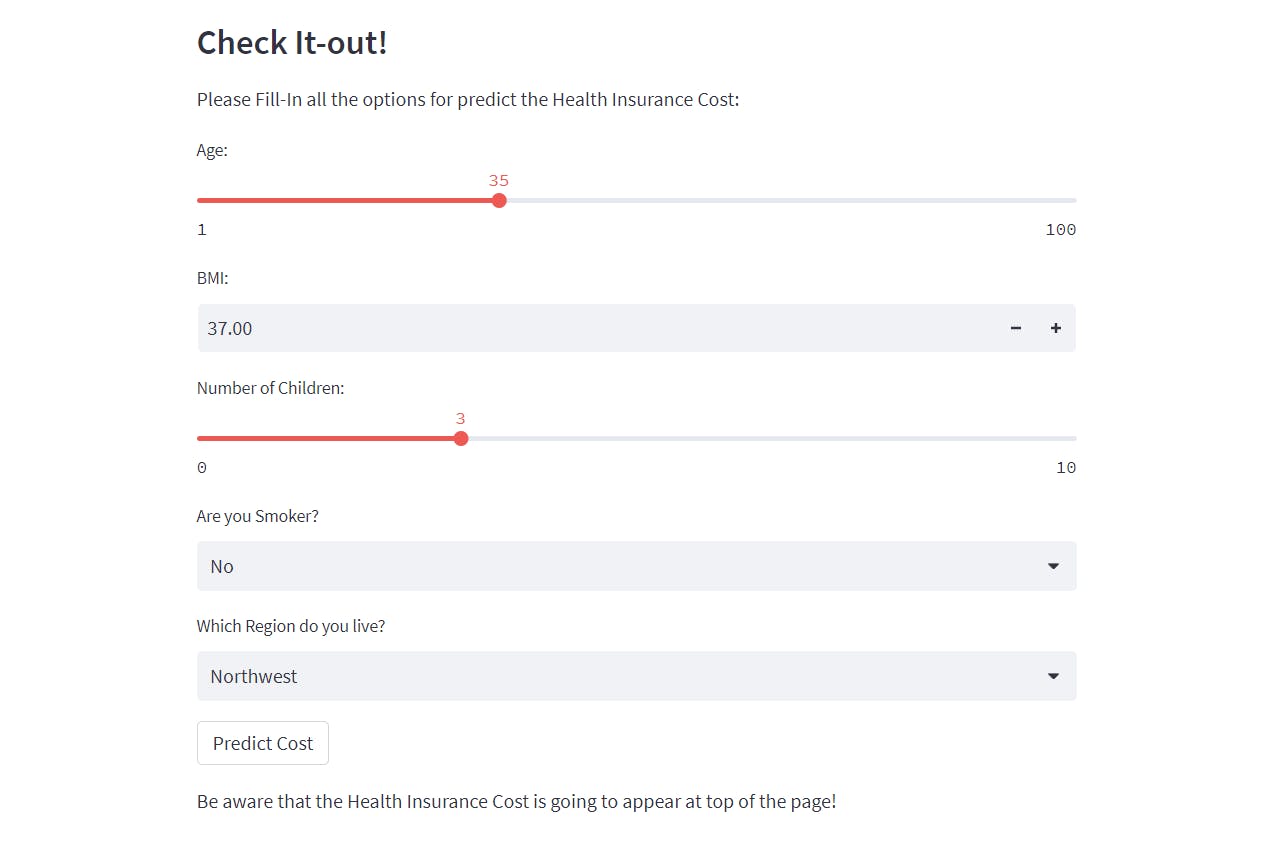

The model predicts a person's health insurance cost in dollars, using features such as age, BMI, number of children, region, and whether the person is a smoker.

The Machine Learning Algorithms that were used for the Health Insurance Dataset were:

- XGB Regressor
- Neural Network
- Random Forest
- Scikit-Learn Linear Regression Model

Modules

import numpy as np

import pandas as pd

from sklearn.preprocessing import LabelEncoder

import matplotlib.pyplot as plt

import sklearn

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import StandardScaler

import tensorflow as tf

from sklearn.model_selection import train_test_split

from sklearn.metrics import r2_score

from sklearn.dummy import DummyRegressor

import pickle as pkl

Loading the Dataset

The Dataset is at the bottom of the page.

dataset = pd.read_csv("./insurance.csv")

X = dataset.iloc[:, 0:-1]

y = dataset.iloc[:, -1]

Preprocessing Data

X = X.drop(columns=["sex"])

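# Encode the smoker flag as an integer; map() gives an integer column regardless of pandas version

X["smoker"] = X["smoker"].map({"yes": 1, "no": 0})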

X = pd.get_dummies(X)
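To make the effect of these preprocessing steps concrete, here is a minimal sketch on a tiny hand-made frame. The rows are invented, and the column names (`age`, `sex`, `bmi`, `children`, `smoker`, `region`) are an assumption about the layout of `insurance.csv`, based on the features listed at the top of the post.

```python
import pandas as pd

# Hypothetical rows in the assumed layout of insurance.csv (values are made up).
sample = pd.DataFrame({
    "age": [19, 33, 45],
    "sex": ["female", "male", "male"],
    "bmi": [27.9, 22.7, 30.1],
    "children": [0, 1, 2],
    "smoker": ["yes", "no", "no"],
    "region": ["southwest", "northwest", "southeast"],
})

# Same three steps as above: drop "sex", encode "smoker" as 0/1,
# then one-hot encode the remaining text column ("region").
encoded = sample.drop(columns=["sex"])
encoded["smoker"] = encoded["smoker"].map({"yes": 1, "no": 0})
encoded = pd.get_dummies(encoded)

encoded_columns = list(encoded.columns)
smoker_values = encoded["smoker"].tolist()
print(encoded_columns)
```

The numeric columns pass through unchanged, and `region` is replaced by one indicator column per distinct value.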

Applying Standard Scaler

X_Train, X_Test, Y_Train, Y_Test = train_test_split(X, y, test_size=0.2, random_state=100)

numerical_features = X.select_dtypes(include=['float64', 'int64'])

numerical_columns = numerical_features.columns

ct = ColumnTransformer([("only numeric", StandardScaler(), numerical_columns)], remainder='passthrough')

X_Train = ct.fit_transform(X_Train)

X_Test = ct.transform(X_Test)
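The scaler above is fitted on the training split only and then reused on the test split, so the test rows are scaled with the training mean and standard deviation. A minimal sketch of that behavior on made-up numbers (the array values and the step name `scale_first` are illustrative, not from the dataset):

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import StandardScaler

# Column 0 is a numeric feature to scale, column 1 is a 0/1 flag left untouched.
train = np.array([[20.0, 1.0], [40.0, 0.0], [60.0, 1.0]])
test = np.array([[50.0, 0.0]])

ct_demo = ColumnTransformer(
    [("scale_first", StandardScaler(), [0])],
    remainder="passthrough",
)

train_scaled = ct_demo.fit_transform(train)  # learns mean and std from train only
test_scaled = ct_demo.transform(test)        # reuses the training statistics

print(train_scaled[:, 0].mean())  # close to 0 for the training column
print(test_scaled)
```

Fitting on the training split alone keeps information about the test rows out of the preprocessing step.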

Random Forest

The number of trees in the Random Forest is varied between 50 and 500 to see which value gives the best (lowest) MAE.

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

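def get_score(n_estimators):

    # Train a forest with the given number of trees and return its MAE on the test split

    model = RandomForestRegressor(n_estimators=n_estimators, random_state=100)

    model.fit(X_Train, Y_Train)

    y_pred = model.predict(X_Test)

    MAE = mean_absolute_error(Y_Test, y_pred)

    # mean_absolute_error already returns a single number, so no further averaging is needed

    return MAE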

random_forest_tree_numbers = [50, 100, 150, 200, 250, 300, 350, 400, 450, 500]

results = {}

for i in random_forest_tree_numbers:

    result = get_score(i)

    results[i] = result

plt.plot(list(results.keys()), list(results.values()))

plt.show()

It seems that the Random Forest with 50 trees has the best performance, so a forest with that setting is now trained on the data.

best_random_forest = RandomForestRegressor(n_estimators=50, random_state=100)

best_random_forest.fit(X_Train, Y_Train)

y_pred = best_random_forest.predict(X_Test)

print("Test Score:" + str(best_random_forest.score(X_Test, Y_Test)))

MAE = mean_absolute_error(Y_Test, y_pred)

print("MAE: " + str(MAE))

print("R2 Score: " + str(r2_score(Y_Test, y_pred)))
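The tree-count search above scores every candidate on the test split, which can make the chosen setting look slightly better than it would on unseen data. One common alternative, shown here only as a sketch and not as the method used in this post, is to pick the number of trees with cross-validation on the training data. The data below is synthetic (`make_regression`), and the shortened candidate list is an assumption to keep the run fast.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for the training split (not the insurance data).
X_demo, y_demo = make_regression(
    n_samples=200, n_features=6, noise=10.0, random_state=100
)

candidates = [50, 100, 150]
cv_mae = {}
for n in candidates:
    model = RandomForestRegressor(n_estimators=n, random_state=100)
    # scikit-learn reports negated MAE so that "higher is better" holds.
    scores = cross_val_score(
        model, X_demo, y_demo, cv=5, scoring="neg_mean_absolute_error"
    )
    cv_mae[n] = -scores.mean()

# Lowest cross-validated MAE wins.
best_n = min(cv_mae, key=cv_mae.get)
print(cv_mae, best_n)
```

With this approach the test split is used only once, for the final score of the selected model.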

XGBRegressor Model

from xgboost import XGBRegressor

xgb_model = XGBRegressor(n_estimators=50, learning_rate=0.05)

xgb_model.fit(X_Train, Y_Train,

              eval_set=[(X_Test, Y_Test)],

              verbose=False)

predictions = xgb_model.predict(X_Test)

print("Test Score: " + str(xgb_model.score(X_Test, Y_Test)))

print("MAE: " + str(mean_absolute_error(Y_Test, predictions)))

print("R2 Score: " + str(r2_score(Y_Test, predictions)))

Linear Regression Scikit-Learn Model

from sklearn.linear_model import LinearRegression

simple_model = LinearRegression()

simple_model.fit(X_Train, Y_Train)

y_pred = simple_model.predict(X_Test)

print("Test Score:" + str(simple_model.score(X_Test, Y_Test)))

MAE = mean_absolute_error(Y_Test, y_pred)

print("MAE: " + str(MAE))

print("R2 Score: " + str(r2_score(Y_Test, y_pred)))

Dummy Regressor Model

dummy_regr = DummyRegressor(strategy="median")

dummy_regr.fit(X_Train, Y_Train)

y_pred = dummy_regr.predict(X_Test)

MAE_baseline = mean_absolute_error(Y_Test, y_pred)

print("MAE: " + str(MAE_baseline))

print("R2 Score: " + str(r2_score(Y_Test, y_pred)))
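The dummy regressor serves as a baseline: with `strategy="median"` it ignores the features and always predicts the median of the training targets. The sketch below, on made-up cost values, shows that behavior and recomputes MAE and R2 by hand so the two metrics used throughout this post are explicit.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.metrics import mean_absolute_error, r2_score

# Made-up targets; the features are irrelevant to a dummy regressor.
y_train_demo = np.array([1000.0, 2000.0, 3000.0, 4000.0, 30000.0])
y_test_demo = np.array([1500.0, 2500.0, 20000.0])
X_train_demo = np.zeros((5, 1))
X_test_demo = np.zeros((3, 1))

baseline = DummyRegressor(strategy="median").fit(X_train_demo, y_train_demo)
pred = baseline.predict(X_test_demo)  # the training median, repeated

# MAE: average absolute difference between truth and prediction.
mae_manual = np.mean(np.abs(y_test_demo - pred))

# R2: 1 minus (residual sum of squares / total sum of squares around the test mean).
ss_res = np.sum((y_test_demo - pred) ** 2)
ss_tot = np.sum((y_test_demo - y_test_demo.mean()) ** 2)
r2_manual = 1.0 - ss_res / ss_tot

print(pred, mae_manual, r2_manual)
```

A constant prediction can never reach an R2 above 0 on the data it is scored on, so any useful model should beat the baseline on both metrics.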

Neural Network Model

num_features = X.shape[1]

nn_model = tf.keras.Sequential()

nn_model.add(tf.keras.layers.InputLayer(input_shape=(num_features,)))

nn_model.add(tf.keras.layers.Dense(units=128, kernel_regularizer=tf.keras.regularizers.L2(l2=0.001), activation="relu", kernel_initializer="he_normal"))

nn_model.add(tf.keras.layers.Dropout(rate=0.4))

nn_model.add(tf.keras.layers.Dense(units=64, kernel_regularizer=tf.keras.regularizers.L2(l2=0.001), activation="relu", kernel_initializer="he_normal"))

nn_model.add(tf.keras.layers.Dropout(rate=0.2))

nn_model.add(tf.keras.layers.Dense(units=32, kernel_regularizer=tf.keras.regularizers.L2(l2=0.001), activation="relu", kernel_initializer="he_normal"))

nn_model.add(tf.keras.layers.Dense(units=1))

nn_model.compile(

    optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),

    loss="mean_absolute_error",

    metrics=["mae"]

)

history = nn_model.fit(

    X_Train, Y_Train,

    batch_size=8,

    epochs=100, verbose=0)

y_pred = nn_model.predict(X_Test)

MAE = mean_absolute_error(Y_Test, y_pred)

print("MAE: " + str(MAE))

print("R2 Score: " + str(r2_score(Y_Test, y_pred)))

Overall, all the previous models performed very well, but the XGB Regressor model was selected for the test, with an MAE of 2310 and an R2 score of 0.88.

Try out the XGB Regressor model with Streamlit, a friendly framework that lets data scientists and machine learning engineers test their models in the browser without knowing frontend technologies like HTML, CSS and JavaScript.

The code for the Streamlit page is at the bottom of the page. Run the following command to start the Streamlit server:

streamlit run main.py
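The imports at the top include `pickle`, but this section does not show where it is used. One plausible use, stated here as an assumption, is saving the trained model to disk so that the Streamlit page can load it. The sketch below shows a pickle round trip with a small stand-in model on made-up data; the file name `model.pkl` is hypothetical.

```python
import pickle as pkl
import tempfile
from pathlib import Path

import numpy as np
from sklearn.linear_model import LinearRegression

# Stand-in model on made-up data (y = 2x + 1); a trained model object
# such as xgb_model could be pickled in the same way.
X_demo = np.array([[0.0], [1.0], [2.0], [3.0]])
y_demo = np.array([1.0, 3.0, 5.0, 7.0])
model = LinearRegression().fit(X_demo, y_demo)

with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = Path(tmp_dir) / "model.pkl"  # hypothetical file name

    # Save the fitted model.
    with open(model_path, "wb") as f:
        pkl.dump(model, f)

    # Load it back, as a separate serving script would.
    with open(model_path, "rb") as f:
        restored = pkl.load(f)

restored_prediction = float(restored.predict(np.array([[4.0]]))[0])
print(restored_prediction)
```

If the model is served this way, the fitted `ColumnTransformer` would need to be saved as well, since new inputs must be scaled with the same statistics as the training data.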